Artist Pipeline Walkthrough

It’s the age-old question, isn’t it: how best to organize all your data, projects, sketches, code snippets and personal work? For years I have carried around a cadre of USB drives (only one of them using RAID) as well as all the online model purchases I’ve made over the course of my career. Lots of that data is irreplaceable now, and for a good long while I’d been looking for a way to consolidate everything into a streamlined (and easily backed up) format that emulates the industry-style data organization I am used to. I’d tried a bunch of different approaches, but this year I finally hit upon a solution that works great for me. In the hope of saving someone else the tedium of putting this all together, I’ll be publishing a handful of articles that give an overview of the process.

Goal

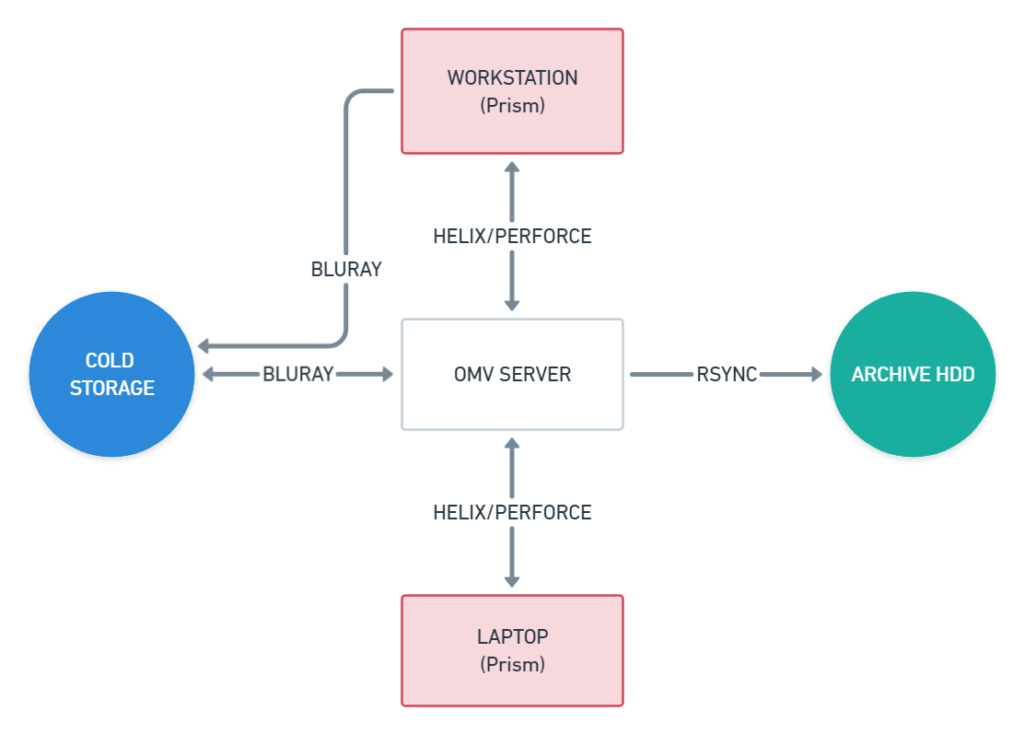

So what specifically am I talking about when I refer to my “pipeline”? Essentially, a way of organising complex collections of data in a standardized format that can then be easily synced to another device capable of providing network access to this data for free. Typically, in most facilities, you work in a %JOB% context, with different asset types defined as part of an asset management system with custom hooks unique to that facility. This is fantastic for multi-user environments but often overkill for the home artist. So when I started this process, I broke down my requirements into 3 distinct stages:

#1 – A local, workstation-based front end that could organise project data in a logical fashion without requiring any manual file management whatsoever. I wanted to be able to hit save in my DCC software and know files were saving with the correct naming conventions, in a standard location.

#2 – A way of synchronising workstation data to a NAS / external server for central access as well as backup.

#3 – A way of backing all this data up for cold storage.

Those 3 steps mean that I can create asset libraries, motion capture libraries, sketches, learning material and all my other self-directed work once, have it accessible from any device, and keep it ad infinitum on a backup that will not decay.
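To make requirement #1 a little more concrete, here is a minimal Python sketch of what "correct naming conventions, in a standard location" can look like. The folder layout, the function names and the `_v0001` version pattern are all my own assumptions for illustration; this is not how Prism itself lays out a project, only the general idea a pipeline tool automates for you.

```python
import re
from pathlib import PurePosixPath

# Hypothetical convention for illustration only: <asset>_<task>_v<NNNN>.<ext>
_VERSION_RE = re.compile(r"_v(\d+)\.[^.]+$")


def next_version(existing_filenames):
    """Return the next free version number, given filenames ending in _vNNNN.<ext>.

    Files that do not follow the convention are ignored.
    """
    versions = [
        int(match.group(1))
        for name in existing_filenames
        if (match := _VERSION_RE.search(name))
    ]
    return max(versions, default=0) + 1


def versioned_save_path(project_root, asset_type, asset, task, version, ext):
    """Build a predictable save path under an assumed 'assets' folder."""
    if version < 1:
        raise ValueError("version numbers start at 1")
    filename = f"{asset}_{task}_v{version:04d}.{ext.lstrip('.')}"
    # PurePosixPath keeps the result identical regardless of the OS running this.
    return PurePosixPath(project_root) / "assets" / asset_type / asset / task / filename


existing = ["lantern_model_v0001.blend", "lantern_model_v0002.blend", "notes.txt"]
version = next_version(existing)
print(versioned_save_path("/projects/demo", "props", "lantern", "model", version, ".blend"))
```

The point is simply that the artist never types a filename: the tool derives it from the project context, so every save lands in a place the sync and backup steps can rely on.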

Overview

The first part of this process comes in the form of the excellent Prism Pipeline by Richard Frangenberg. I’d become aware of his toolset after seeing his fantastic presentation at FMX 2021 on USD integration in Prism. After seeing such an elegant use of standard workflows in that presentation, I downloaded a copy of Prism and got to testing it. From the get-go, Prism provides such a user-friendly experience that I was sold on it immediately, and its extensibility means that as my needs grow, so can this pipeline.

For the second part of this process, I decided to use Perforce/Helix, initially because of my familiarity with it and its integration with MotionBuilder and Unreal, but also because, as a versioning system, it’s very fast and easy to navigate. Simply put, since Prism sets up a project structure that contains everything, synchronizing these projects with Perforce makes for a fabulously simple archival/backup process, with the added benefits that come with versioning. The server I have Helix/Perforce on is an HP Microserver with 4x8TB drives in RAID5, giving me 24TB of usable storage. I sized this with Blu-ray archival in mind; note that reasonably priced single-layer BD-RE discs hold 25GB each, so a large backup spans multiple discs. The server is running a stock version of the excellent OpenMediaVault, which is based on my favourite Linux distro, Debian. Installing Helix/Perforce on this server is as easy as running apt-get after adding the Perforce repository to your APT config.
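For anyone sizing their own array, the storage arithmetic is easy to check. The sketch below is only an illustration: the function names and the 180GB example project are made up, it uses the standard RAID5 rule that one drive's worth of space goes to parity, and it assumes a nominal 25GB for a single-layer BD-RE disc (real usable space is slightly lower once the filesystem is written).

```python
import math


def raid5_usable_tb(drive_count, drive_size_tb):
    """Usable capacity of a RAID5 array: one drive's worth of space holds parity."""
    if drive_count < 3:
        raise ValueError("RAID5 needs at least 3 drives")
    return (drive_count - 1) * drive_size_tb


def discs_needed(data_gb, disc_capacity_gb=25):
    """Number of discs required to hold data_gb, rounding up to whole discs."""
    if data_gb < 0 or disc_capacity_gb <= 0:
        raise ValueError("sizes must be positive")
    return math.ceil(data_gb / disc_capacity_gb)


# The array described above: 4 drives of 8TB each.
print("usable TB:", raid5_usable_tb(4, 8))

# Hypothetical 180GB project archived to single-layer discs.
print("discs for a 180GB project:", discs_needed(180))
```

Running the numbers per project like this is a quick way to decide what is worth burning to disc and what is better left on the external drive.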

Finally, for offline archival, I have a WD MyCloud 24TB drive which I can plug directly into the Microserver and rsync the RAID array straight to it. This also gives me a step between cold storage and live data: if I ever remove data from the RAID, I can still access it separately, either via the server or by mounting the drive directly on my workstation. I also have a Blu-ray burner in my workstation and an external Blu-ray writer for my server to make cold storage backups for offsite storage. Why so cautious, I hear you ask? I have never liked the idea that in one event I may lose everything, even if that event is highly unlikely. Having an offsite cold backup of my data means that if something happens to my home, I don’t lose anything.
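As a rough idea of what that rsync step can look like when scripted, here is a small Python wrapper. Treat it as a sketch under assumptions: the mount points `/srv/raid` and `/mnt/archive` are placeholders, the function names are my own, and the flags are just a conservative starting set. It deliberately leaves out rsync's `--delete` option so that files removed from the RAID stay on the archive drive, and it defaults to a dry run so nothing is copied until you have checked the output.

```python
import subprocess


def build_rsync_command(source, destination, dry_run=True):
    """Build an rsync command that copies new and changed files only.

    --delete is intentionally omitted, so anything removed from the source
    is kept on the destination.
    """
    cmd = ["rsync", "-a", "--human-readable"]
    if dry_run:
        # Report what would be transferred without writing anything.
        cmd.append("--dry-run")
    # A trailing slash on the source means "the contents of this directory".
    cmd += [source.rstrip("/") + "/", destination.rstrip("/") + "/"]
    return cmd


def mirror(source, destination, dry_run=True):
    """Run rsync and raise CalledProcessError if it exits with an error."""
    subprocess.run(build_rsync_command(source, destination, dry_run), check=True)


# Placeholder paths; substitute your own mount points before calling mirror().
print(" ".join(build_rsync_command("/srv/raid", "/mnt/archive")))
```

Once the dry run lists what you expect, calling `mirror(..., dry_run=False)` performs the actual copy.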

Conclusion

This is a basic walkthrough of my thought process and the overall setup of my home pipeline. In upcoming posts I’ll cover each section in a little more detail, especially the setting up of OpenMediaVault, given its reliance on Linux knowledge. But know that you can build a file server with any hardware at all; you don’t have to go with a prebuilt server. I only chose to go that way because of the hardware RAID, the size of the enclosure, and the fact that the company was practically giving them away on eBay.

If you have any questions or would like a follow-up, please do not hesitate to reach out on my contact page. Thanks.