Realtime Mocap Streaming In Unity

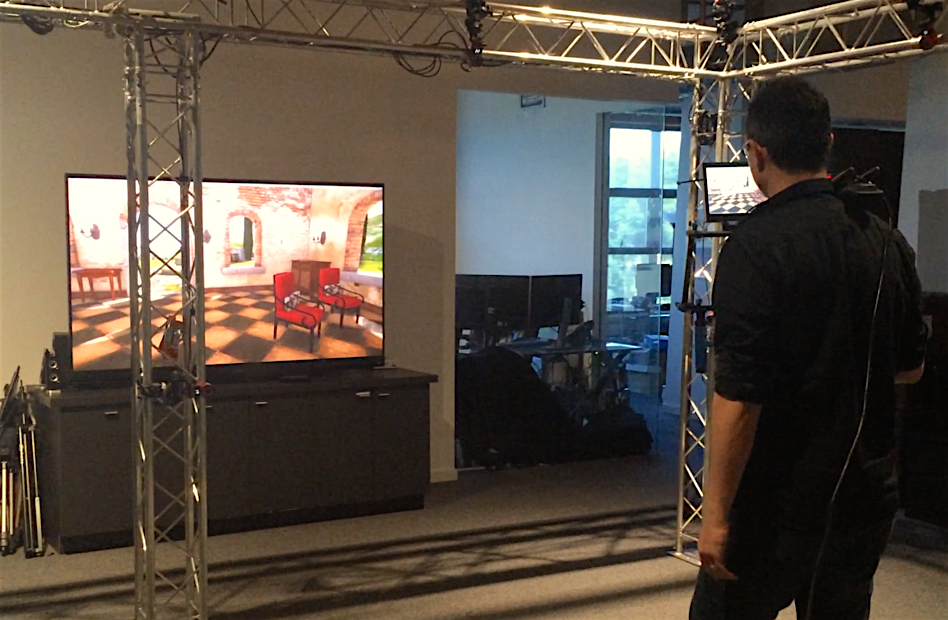

Traditionally, realtime virtual production has taken place within Motionbuilder, the industry standard for motion capture. However, with the surge in popularity of realtime game engines, I had the opportunity to begin developing realtime production solutions within Unity, both for my role as Motion Capture Supervisor at Scanline VFX and for my own personal VR projects.

I was particularly inspired by what ILMxLab had achieved using similar processes, and I set out to develop a pipeline that could support a typical virtual production workflow while using the power of Unity to drastically improve the visuals beyond what is possible inside Motionbuilder.

At the time, the only option was a very basic UDP client that could stream rigid bodies, and nothing else, to Unity. It was provided by Naturalpoint, the manufacturers of the Optitrack motion capture stage I was using. Starting from this initial code, along with some code from Bradley Neumann at the USC World Building lab, I was able to begin streaming this data directly from Motive (the motion capture software) to Unity.

In this example we’re using the stock rigid body streaming code from Optitrack with the updated Unity Body.cs and Stream.cs from Bradley to stream the correct body type. I then perform a simple retarget inside Unity onto a default skeleton. Combining this workflow with an Oculus headset provides a framework for full body VR solutions.

Subsequently, collaborating with Bradley and other members of the Optitrack community, we developed an updated plugin for streaming multiple groups of rigid bodies and skeletons. This gave us an initial framework on which to begin building our virtual production solution within Unity.
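
To give a rough idea of what receiving rigid bodies over UDP involves, here is a minimal sketch in Python. It is not the Naturalpoint client or Bradley's code, and it does not use Motive's real packet format. The packet layout (a body count followed by an id, a position and a quaternion per body), the port number and every name in it are assumptions made purely for illustration.

```python
import socket
import struct
from typing import Iterator, List, NamedTuple, Tuple


class RigidBody(NamedTuple):
    """One tracked rigid body: an id, a position and a rotation quaternion."""
    body_id: int
    position: Tuple[float, float, float]
    rotation: Tuple[float, float, float, float]


# Hypothetical wire layout (NOT Motive's actual format), little-endian:
#   int32 body count, then per body: int32 id, 3 x float32 position,
#   4 x float32 quaternion (x, y, z, w).
_COUNT = struct.Struct("<i")
_BODY = struct.Struct("<i3f4f")


def pack_bodies(bodies: List[RigidBody]) -> bytes:
    """Serialise rigid bodies into the hypothetical packet layout."""
    data = _COUNT.pack(len(bodies))
    for body in bodies:
        data += _BODY.pack(body.body_id, *body.position, *body.rotation)
    return data


def parse_bodies(packet: bytes) -> List[RigidBody]:
    """Decode one packet back into a list of rigid bodies."""
    (count,) = _COUNT.unpack_from(packet, 0)
    offset = _COUNT.size
    bodies = []
    for _ in range(count):
        values = _BODY.unpack_from(packet, offset)
        bodies.append(RigidBody(values[0], values[1:4], values[4:8]))
        offset += _BODY.size
    return bodies


def listen(host: str = "127.0.0.1", port: int = 9000) -> Iterator[List[RigidBody]]:
    """Yield the rigid bodies from each UDP packet received.

    The host and port are placeholder values, not Motive defaults.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        while True:
            packet, _sender = sock.recvfrom(65535)
            yield parse_bodies(packet)
```

On the Unity side, the equivalent loop would apply each body's position and rotation to a matching object in the scene every frame. Streaming skeletons follows the same pattern, with one set of transforms per bone rather than per rigid body.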

Links

GitHub code: https://github.com/DanWuh/Motive2Unity

Motive forums: https://forums.naturalpoint.com/viewtopic.php?f=59&t=12988&start=0